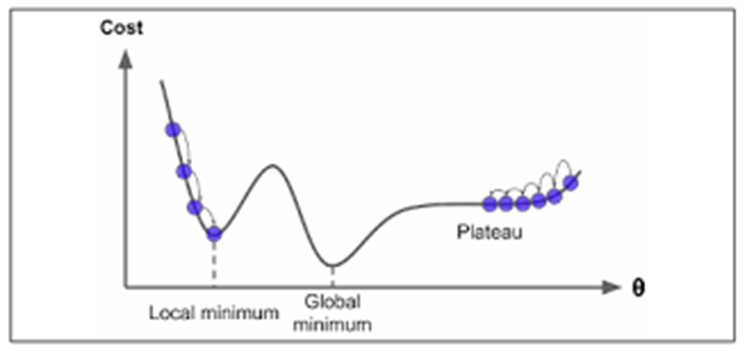

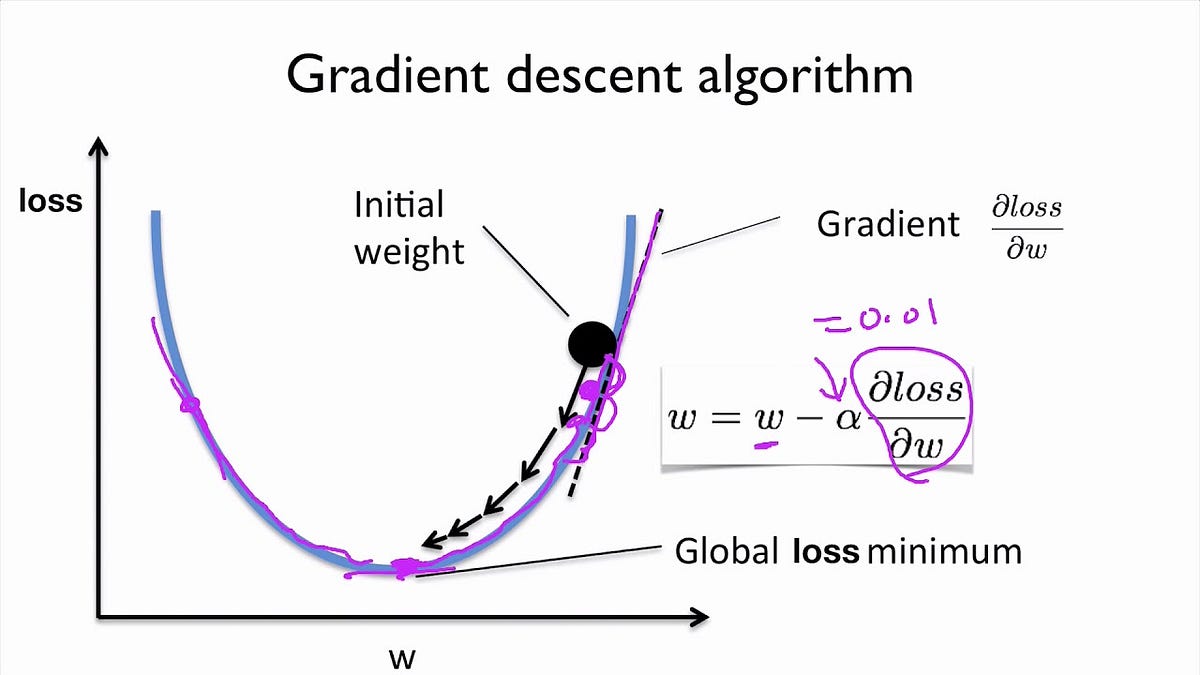

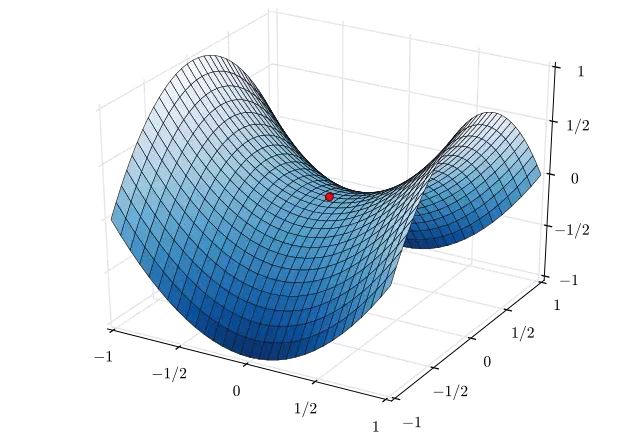

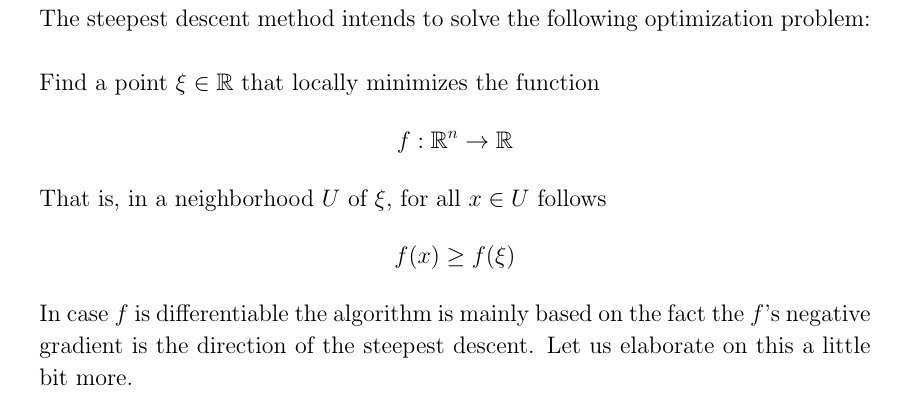

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, thereby reducing the value of the function.
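The update rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the source's own code. It uses a fixed step size on a hypothetical test function f(x, y) = (x - 3)^2 + 2(y + 1)^2, whose single minimum is at (3, -1). The names `gradient_descent`, `learning_rate`, `tol` and `max_iter` are illustrative choices.

```python
from typing import Callable, Sequence


def gradient_descent(
    grad: Callable[[list[float]], list[float]],
    x0: Sequence[float],
    learning_rate: float = 0.1,  # hypothetical fixed step size
    tol: float = 1e-8,           # stop when the gradient norm falls below this
    max_iter: int = 10_000,
) -> list[float]:
    """Fixed-step gradient descent: x_{k+1} = x_k - learning_rate * grad(x_k)."""
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        # A (numerically) zero gradient means we reached a stationary point.
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        # Step opposite to the gradient, the direction of steepest local decrease.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


def f(p: list[float]) -> float:
    """Hypothetical test function with its minimum at (3, -1)."""
    x, y = p
    return (x - 3.0) ** 2 + 2.0 * (y + 1.0) ** 2


def grad_f(p: list[float]) -> list[float]:
    """Analytic gradient of f."""
    x, y = p
    return [2.0 * (x - 3.0), 4.0 * (y + 1.0)]


if __name__ == "__main__":
    x_min = gradient_descent(grad_f, [0.0, 0.0])
    print([round(v, 6) for v in x_min])  # → [3.0, -1.0]
```

With `learning_rate = 0.1`, the error in x shrinks by a factor of 0.8 per step and the error in y by 0.6, so the iteration converges. For this particular f, a step size of 0.5 or larger would make the y coordinate oscillate or diverge. In general the method only guarantees a stationary point, which is a local (not necessarily global) minimum.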

By a mysterious writer

Last updated 05 June 2024

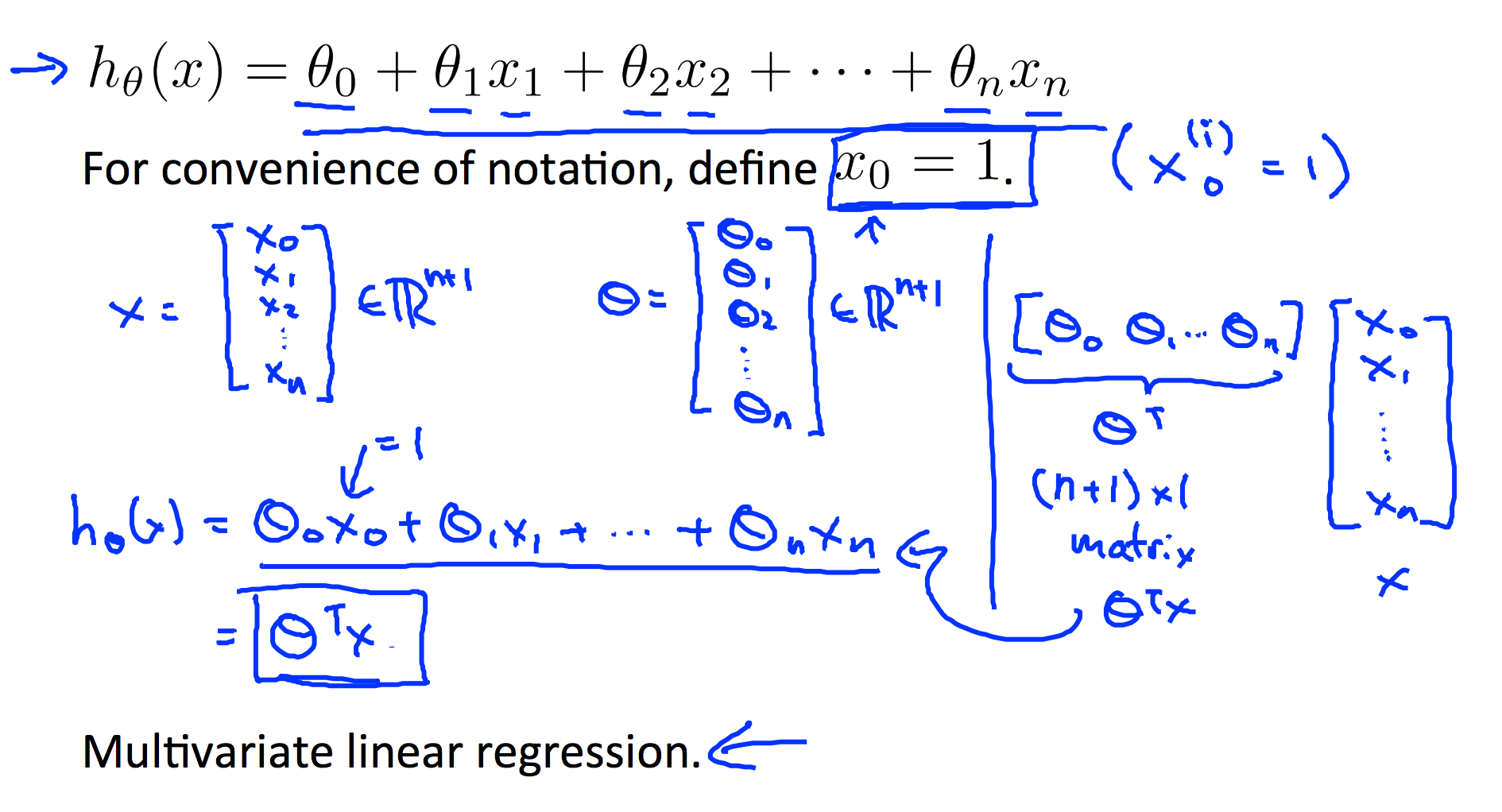

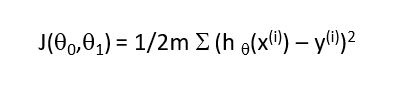

[L2] Linear Regression (Multivariate). Cost Function. Hypothesis. Gradient

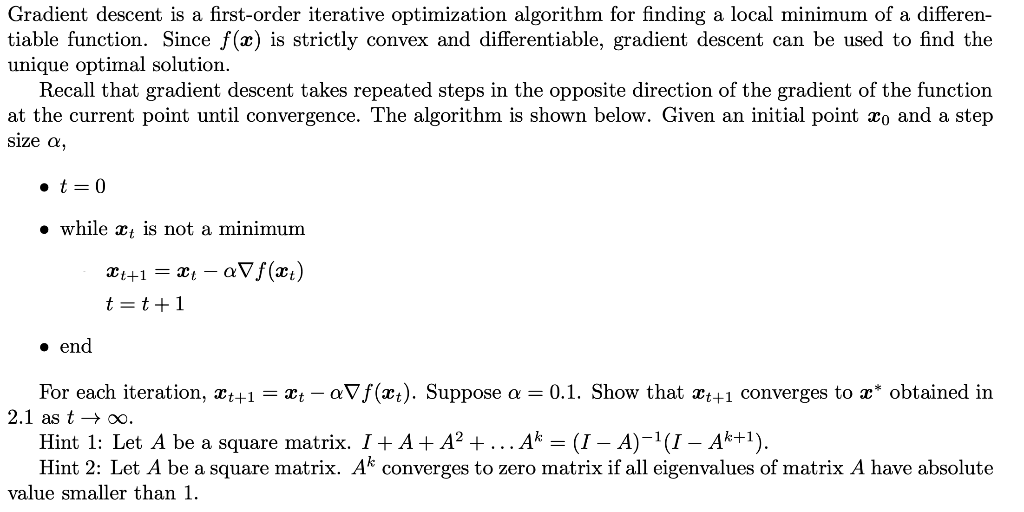

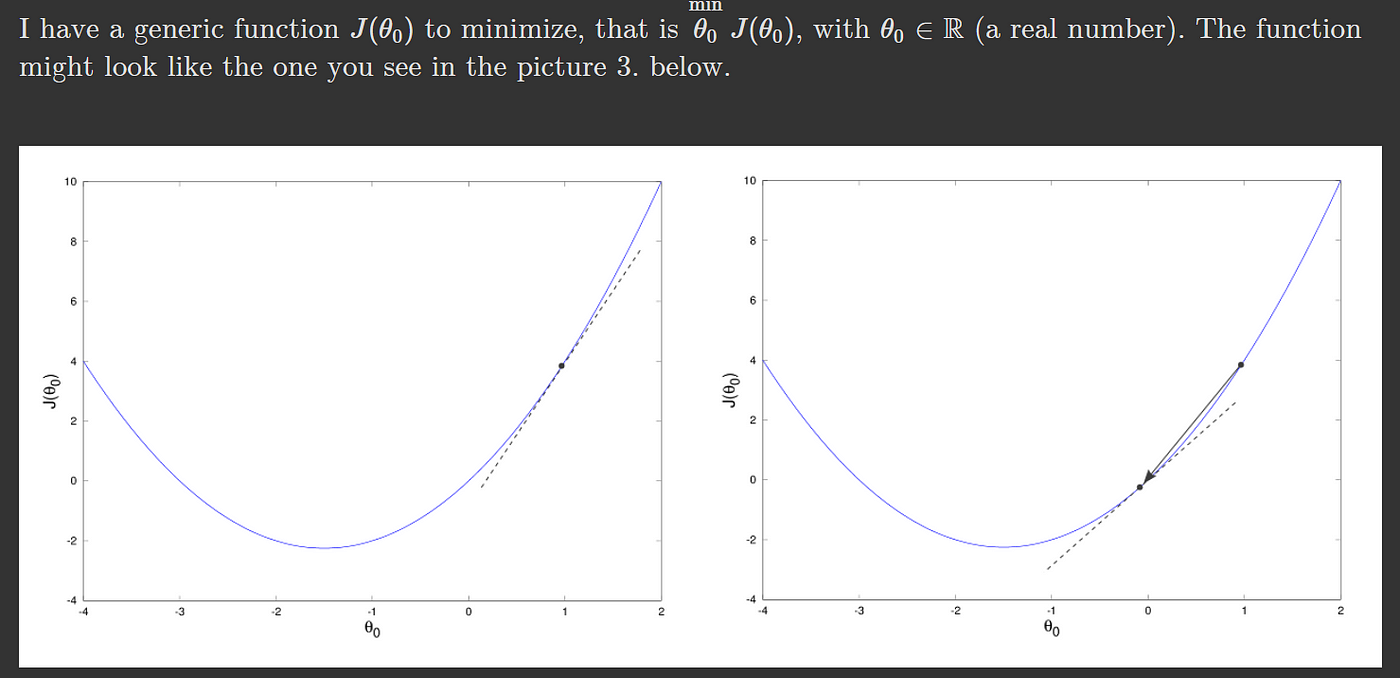

Gradient Descent Algorithm

Solved Gradient descent is a first-order iterative

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

Gradient Descent algorithm. How to find the minimum of a function…, by Raghunath D

Can gradient descent be used to find minima and maxima of functions? If not, then why not? - Quora

(PDF) Finding approximate local minima faster than gradient descent

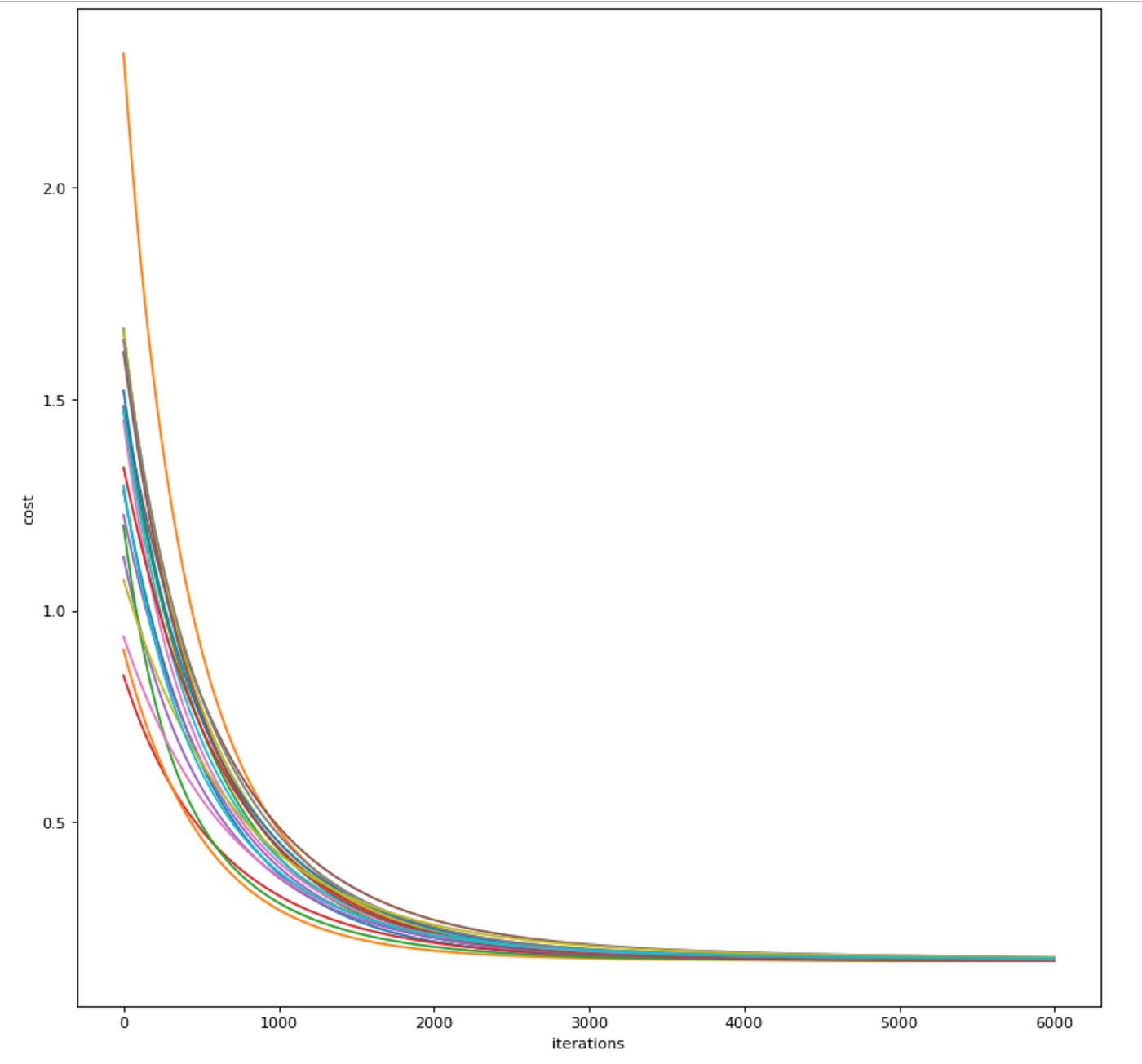

Linear Regression From Scratch PT2: The Gradient Descent Algorithm, by Aminah Mardiyyah Rufai, Nerd For Tech

[Solved] 4. Gradient descent is a first-order iterative optimisation

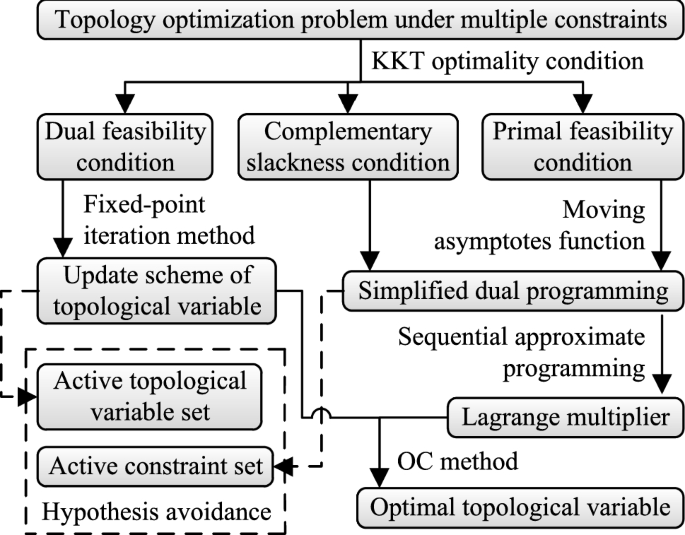

An optimality criteria method hybridized with dual programming for topology optimization under multiple constraints by moving asymptotes approximation

Gradient Descent Algorithm in Machine Learning - Analytics Vidhya

Recommended for you

Steepest Descent Method Search Technique

Steepest Descent Method - an overview

Implementing the Steepest Descent Algorithm in Python from Scratch, by Nicolo Cosimo Albanese

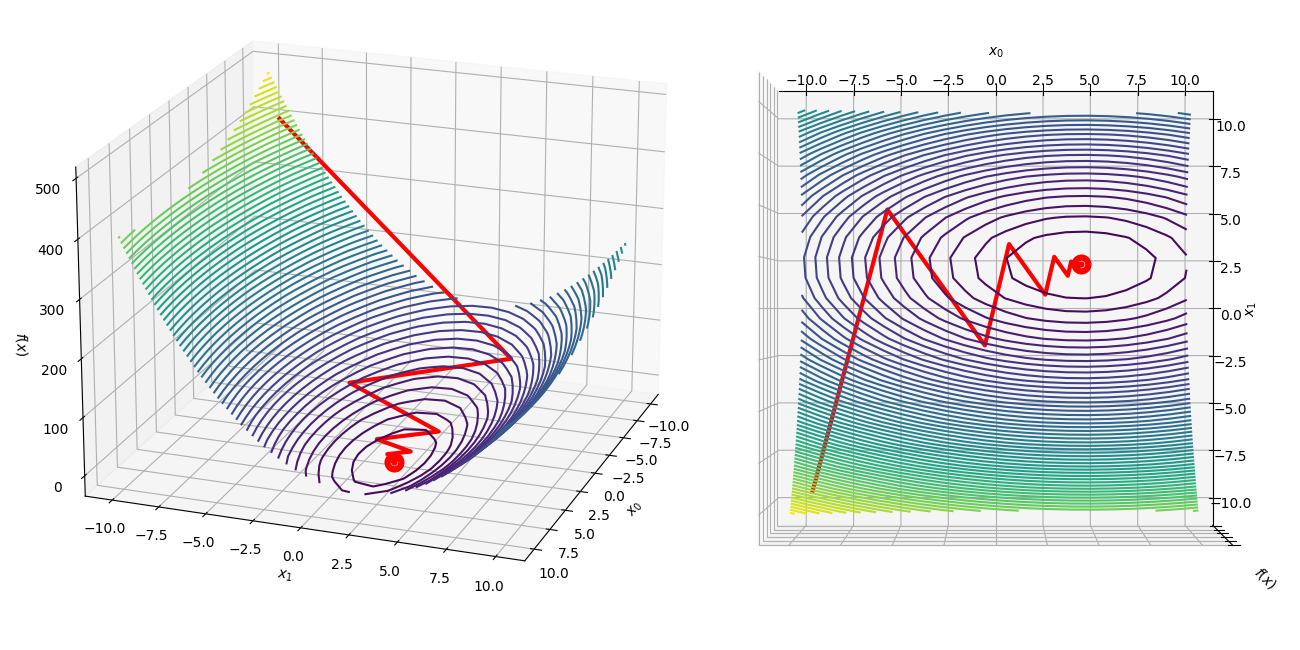

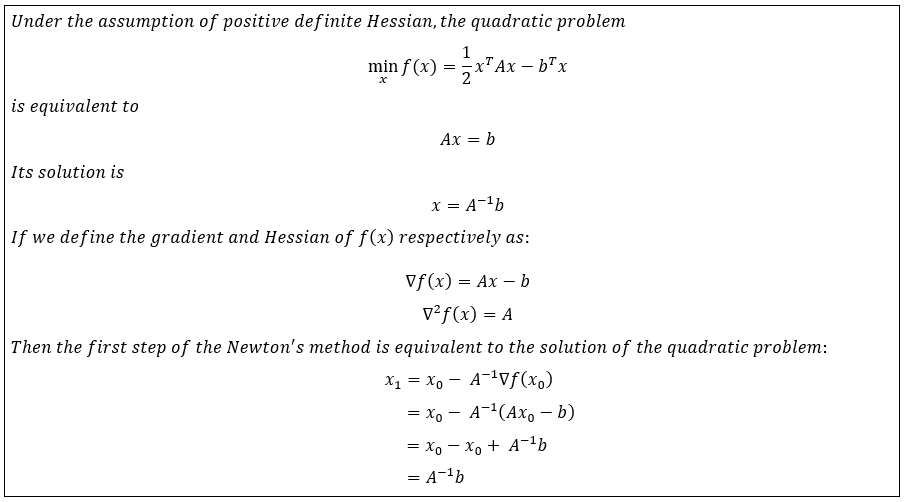

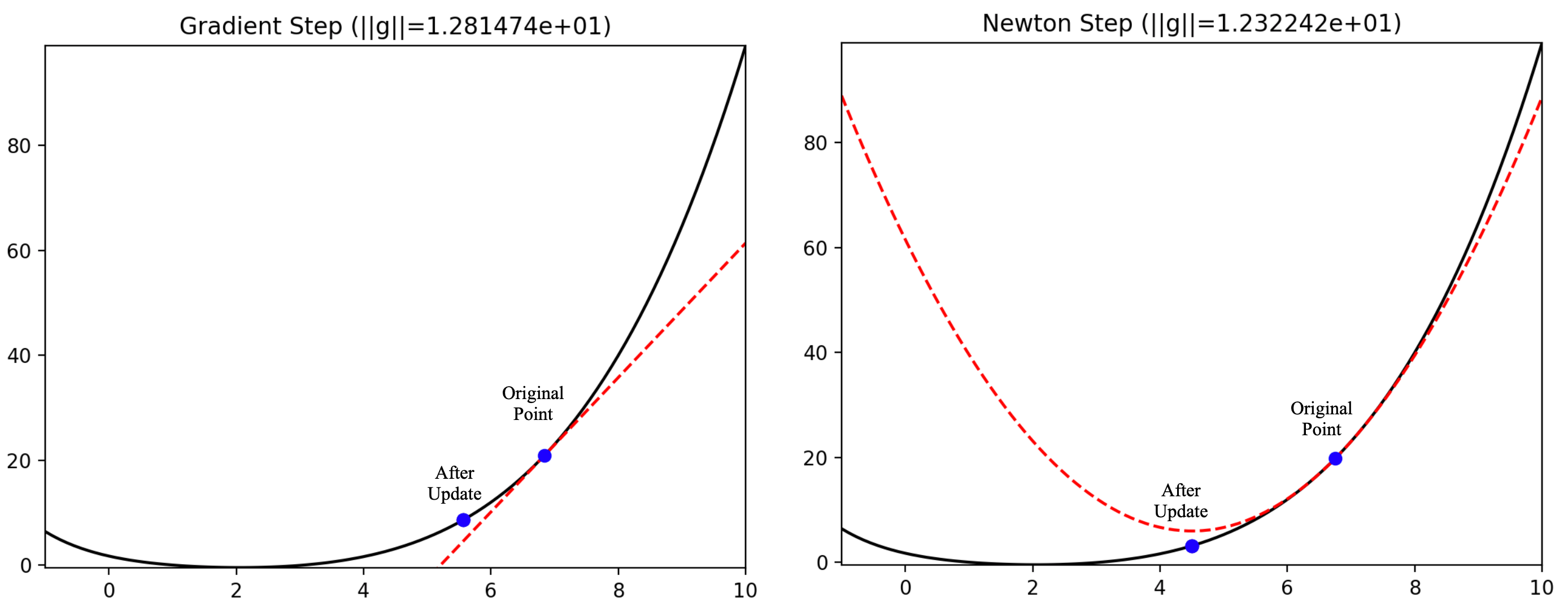

Steepest Descent and Newton's Method in Python, from Scratch: A Comparison, by Nicolo Cosimo Albanese

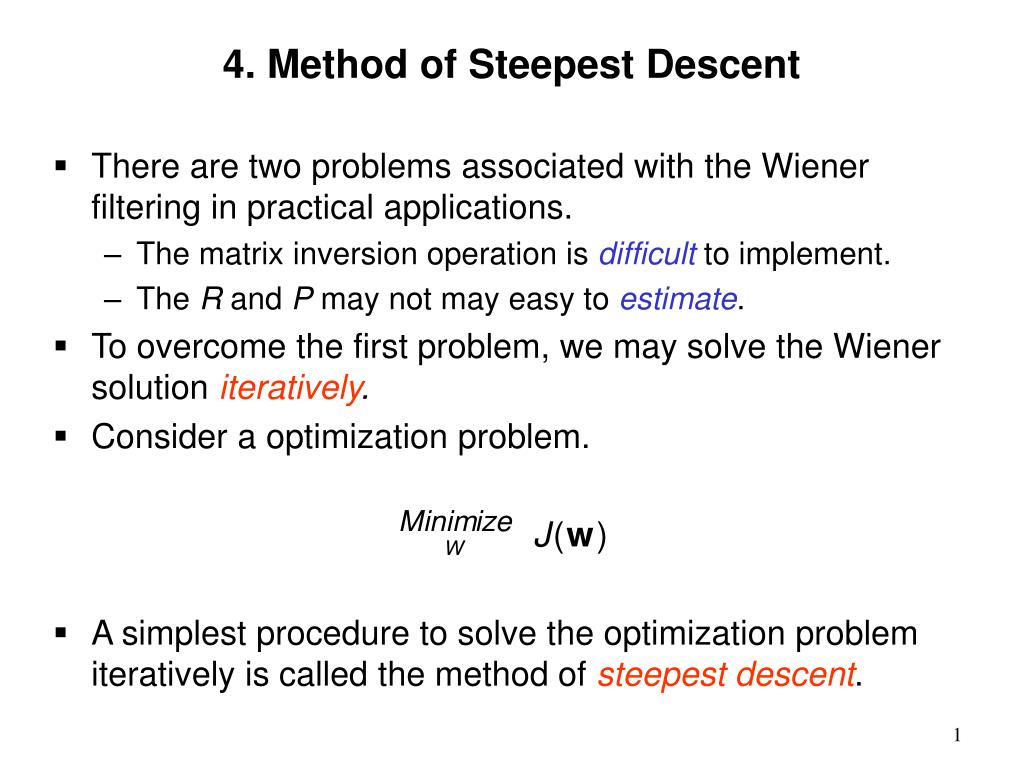

PPT - 4. Method of Steepest Descent PowerPoint Presentation, free download - ID:5654845

Lecture 7: Gradient Descent (and Beyond)

Steepest Descent and Newton's Method in Python, from Scratch: A… – Towards AI

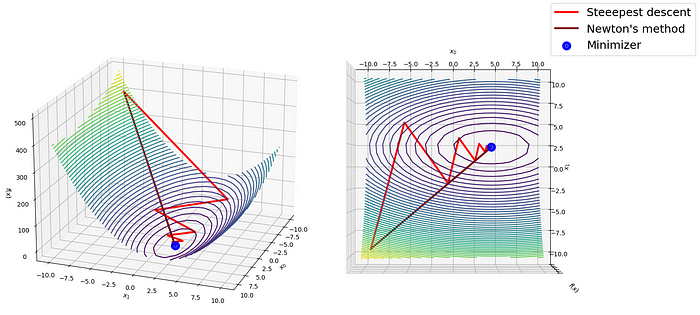

Example of Steepest Descent (left) and Conjugate Gradient (right)

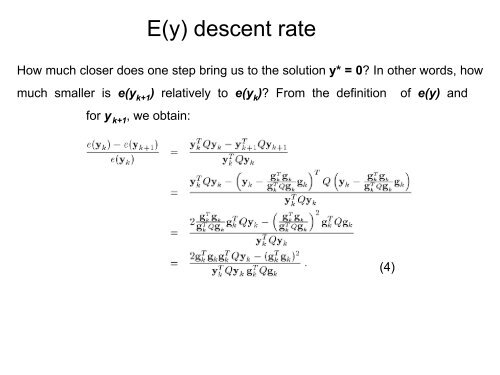

Steepest Descent Rate

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding
